Interview

Disciplinary convergence opens a whole new world

Jin-Ho Park, Professor, Department of Korean Language and Literature

Deep learning, in which neural networks learn on their own from massive datasets, has significantly reduced the role of linguists in developing AI language technologies such as speech recognition and machine translation. Now that competition in AI language model development increasingly centers on economies of scale, Professor Jin-Ho Park argues that leveraging a linguist’s domain knowledge can cut costs and shorten neural network training time, leading to more efficient development. To prove this point, in 2018 he became the first Korean language scholar to venture into AI development, and he successfully designed a deep learning-based Korean morpheme analyzer. We met Professor Park to discuss his efforts to develop useful, practical AI technologies while breaking down barriers between disciplines.

Q1

Could you familiarize us with “natural language processing,” a typical application of AI technology?

Natural language processing (NLP) analyzes the meaning of the natural language we use in daily life so that computers can process it. NLP is widely used in speech recognition, machine translation, chatbots, and spam mail classification. Since 2017, the transformer neural network has been the predominant model for teaching computers to understand text. Its most salient feature is the attention mechanism, which works like this: the closer the relationship between two words in a given sentence, the higher the attention score between them, and the more distant the relationship, the lower the score. In a nutshell, the neural network uses this mechanism to learn the context and meaning of a sentence.
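To make the idea of attention scores concrete, here is a minimal sketch of scaled dot-product attention, the scoring scheme commonly used in transformer models. The two-dimensional word vectors are hypothetical values picked by hand for illustration (a trained model learns vectors with hundreds of dimensions), and the function names `softmax` and `attention_weights` are assumptions of this sketch, not part of any library.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(query, keys):
    """Scaled dot-product attention weights of one query over all keys."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    return softmax(scores)

# Hypothetical word vectors, chosen by hand so that "is" points in
# nearly the same direction as "girl" and away from "boys".
words = ["girl", "boys", "is"]
vectors = {
    "girl": [1.0, 0.0],
    "boys": [0.0, 1.0],
    "is": [0.9, 0.1],
}

weights = attention_weights(vectors["is"], [vectors[w] for w in words])
for word, weight in zip(words, weights):
    print(f"is -> {word}: {weight:.3f}")
```

With these toy vectors, "is" receives a higher weight toward "girl" than toward "boys," mirroring the description that closely related words get higher attention scores.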

Q2

How can a linguist’s domain knowledge be leveraged in developing AI language models?

With the attention mechanism, the network learns to generate a sentence in the manner just described. The first word is created, then the second word most related to the first, and then a third word that connects naturally to the preceding two. There are sentences, however, that do not follow this principle. For example, in the sentence “The girl loved by many boys is my sister,” the verb “is” relates to “girl,” which is far away, not to “boys,” which is right next to it. To predict the next word, the network starts from randomly initialized parameter values.1) As a result, training takes a long time before the most relevant words are assigned high attention scores, and accurate analysis requires massive data input and, consequently, a large amount of energy. Linguists, however, recognize such special cases instantly through sentence analysis, because the attention mechanism closely resembles syntactic analysis in linguistics, which breaks a sentence down into its constituents and analyzes the relationships among them. Consulting linguists, instead of initializing parameter values at random, could therefore yield initial values that approximate the correct ones, significantly reducing both the time required for neural network training and the astronomical costs of developing AI.

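1) Parameter: In programming, a variable that allows the values passed as arguments to be used within a function when the function is called. It affects the operation of software or systems and can be understood as data input from the outside. In a neural network, parameters are the numerical values (weights) that are adjusted during training.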
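The contrast between random starting values and linguistically informed ones can be illustrated with a small sketch. This is not Professor Park's implementation: the functions `random_init` and `syntax_init`, the `boost` value, and the hand-annotated list of syntactic heads are all assumptions made for illustration. The sketch builds two sets of initial attention scores for the example sentence, one random and one that favors pairs of words linked in a syntactic analysis.

```python
import math
import random

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def random_init(n, seed=0):
    """Baseline: small random scores that carry no linguistic knowledge."""
    rng = random.Random(seed)
    return [[rng.uniform(-0.1, 0.1) for _ in range(n)] for _ in range(n)]

def syntax_init(n, heads, boost=2.0):
    """Scores that favor each word's syntactic head and its dependents.

    heads[i] is the index of word i's head, or None for the root.
    """
    scores = [[0.0] * n for _ in range(n)]
    for child, head in enumerate(heads):
        if head is not None:
            scores[child][head] += boost  # child attends to its head
            scores[head][child] += boost  # head attends to its dependent
    return scores

tokens = ["The", "girl", "loved", "by", "many", "boys", "is", "my", "sister"]
# Hand-annotated heads (one assumed analysis, with "is" as the root):
# "girl" depends on "is", while "boys" sits inside the phrase "loved by many boys".
heads = [1, 6, 1, 2, 5, 3, None, 8, 6]

verb = tokens.index("is")
random_weights = softmax(random_init(len(tokens))[verb])
syntax_weights = softmax(syntax_init(len(tokens), heads)[verb])

for token, r, s in zip(tokens, random_weights, syntax_weights):
    print(f"is -> {token:<6} random={r:.3f} syntax={s:.3f}")
```

Under the random start, "is" attends to every word almost equally, so the link to "girl" would have to be discovered from data. Under the syntax-informed start, "girl" already outweighs the adjacent "boys." In a real model such values would at most serve as a starting point or an additive bias that training then refines.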

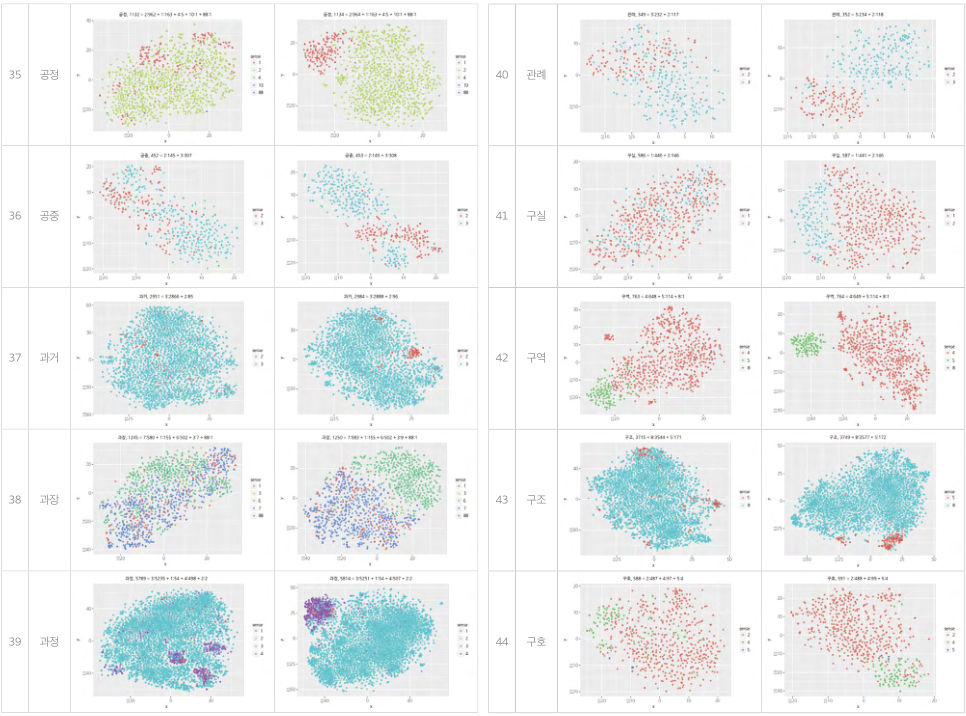

The results of standard vectorization of 10 homonyms (left) and of vectorization that factors in grammatical structures derived from syntactic analysis (right). The homonyms are more clearly distinguished on the right than on the left.

Q3

Why do we need an AI language model tailored to the Korean language?

American companies lead AI-related businesses, so other countries are forced to use the AI models these companies develop and adapt them to their own circumstances. The same holds true for language models. Yet there are about 7,000 languages in the world, each with its own unique system and characteristics, so an English-based algorithm is unlikely to apply seamlessly to other languages. Some users may settle for a model with 90% accuracy, while others absolutely need a model with very high accuracy. It is therefore time for Korean language scholars and engineers to collaborate on a language model that reflects the special features of the Korean language and delivers near-perfect performance.

Q4

You started delving into software 20 years ago and currently engage in AI-related studies and research. Do you have any special memories?

In 1999, at the forefront of the digital age, I participated in the Korean informatization project, which set out to construct a Korean corpus and electronic dictionary. Although linguists played a pivotal role in developing the programs, linguists and programmers struggled to communicate because their disciplines seemed so different. To overcome this problem, I taught myself the C and C++ programming languages, which was not only intriguing but also enabled me to interact effectively with developers. It was in 2018 that I seriously approached the field of artificial intelligence. At the time, I set my sights on creating one useful program rather than exploring all fields of computer science. In those days, works of medieval literature such as 『Seokbosangjeol』 and 『Wolinseokbo』 had been digitized, but the search function did not work properly, causing great inconvenience. I created a program in which typing a keyword produces a list of every occurrence of that keyword across the literary works, and I distributed it to my juniors and seniors as well as to other faculty members around me. Although I spent many sleepless nights fixing errors and updating the program, I felt rewarded when they thanked me for significantly reducing the time they spent on research papers.

Q5

What does it take to break down barriers between disciplines and promote inter-disciplinary convergence?

Linguistics is comparable to programming: unlike other disciplines in the humanities, it resembles mathematics in having fixed rules. Moreover, both programming and linguistics deal with “language.” Nevertheless, many students find programming classes demanding. As I did myself, I encourage students not to reach for the high-hanging fruit (which requires in-depth and extensive study) but to approach other disciplines by setting and fulfilling a simple yet definite goal. This way, they stay motivated and feel a sense of accomplishment. Fortunately, quite a few students have come to realize the importance of exposure to disciplines outside their major. I am also putting a lot of effort into devising ways to make my classes more engaging and effective, so that students are inspired to make the most of their studies.

Q6

Please tell us about your research plans down the road.

Reinforcing the presence of linguists in NLP research requires conclusive evidence that linguistic knowledge enhances the performance of AI language models. The development of a deep learning-based Korean morpheme analyzer is only the first step toward a much wider world of natural language processing. As a linguist, I am determined to contribute to developing AI language models that embrace the traits and individuality of the Korean language, and to cultivating the talent needed to make that happen.